AI in science communication: help or hype?

Artificial intelligence has entered science communication – and it’s not leaving. From generating drafts to summarizing research papers, AI tools now influence how biotech marketers, scientists, and content teams write, research, and distribute information.

But the real question isn’t whether AI can write content. It’s whether that content communicates effectively to the right audience, with the right level of clarity and credibility. In this post, I’ll explore where AI adds value, where it falls short, and why human oversight is more critical than ever – especially in life science marketing and communication.

- From novelty to norm: how AI became inseparable from science comms

- Not all users are the same – what AI means for different audiences

- The freelance dilemma: what AI can do (and what it can’t)

- Three client mindsets and how they shape AI use

- The fatal flaw: content without real-life anchors

- Respect the tool, respect the task

- Let’s keep the human intelligence in science communication

From novelty to norm: how AI became inseparable from science comms

It started quietly. A few content generators here, a headline suggestion there. Now, AI tools like ChatGPT, Neuroflash, and Perplexity are part of daily workflows for biotech marketers and writers alike.

If you’re under pressure to do more with fewer resources, it’s easy to see the appeal. Large language models (LLMs) can quickly transform a white paper into a short blog post or summarize complex research into a snappy LinkedIn update. For product managers and freelance writers juggling timelines, this can mean the difference between getting content out and not publishing at all.

Using LLMs to generate science content can be helpful, but it’s not without risk. These systems cannot (yet) grasp nuance and context. While they can rephrase, summarize, and mimic tone, they cannot weigh scientific accuracy against commercial messaging or distinguish between regulatory red flags and stylistic preferences.

In short, AI is a tool, not a strategist. It can speed up the drafting process, but it can’t substitute for human judgment. Someone still needs to ask: Is this correct? Is it appropriate for this audience? Does it align with what we’re legally and ethically allowed to say?

In high-stakes fields such as biotechnology, where a single word can change the way something is interpreted, that level of expert oversight isn’t optional – it’s essential.

Not all users are the same – what AI means for different audiences

The impact of AI in science communication isn’t uniform. It depends entirely on who’s using it and for what purpose. The expectations and risks vary widely across different audience segments in the life science ecosystem.

For biotech marketers and product managers

These are often time-starved professionals managing complex products, such as purification kits or sequencing platforms, with limited internal writing support. For them, AI can accelerate content creation, helping convert a technical white paper into a blog post or a set of slides into social media posts.

But that’s not all. Tools like AlphaSense, Marketbeam, and Perplexity AI also support market research and competitor monitoring. They can track product launches, analyze regulatory filings, or scan publications and competitor websites to spot emerging trends. Used strategically, these tools offer biotech marketers a real-time edge in positioning and messaging.

Still, the outputs are only as good as the prompts – and the interpretation. If the tool isn’t guided by someone who understands both the product and the audience, the results often miss the mark or lack actionable nuance. AI can synthesize what’s out there, but only humans can interpret what matters for their unique context.

For freelance science writers

Some clients now assume AI can replace freelancers. But that only holds for surface-level tasks. When it comes to crafting accurate, context-rich content – especially for novel technologies or nuanced use cases – experienced writers are still essential. AI might generate text, but it can’t interpret conflicting data, incorporate client feedback, or ensure brand-voice consistency.

For product end-users

Lab users comparing reagents or instruments online may use AI tools for research. However, product specs aren’t always structured in AI-friendly ways, which can lead to misleading comparisons. If one vendor’s description is vague or poorly optimized, that product may be left out of the AI-generated results entirely, regardless of its quality.

For investors and lay stakeholders

Most investors aren’t molecular biologists. They rely on concise, benefit-focused communication to evaluate new technologies. But AI doesn’t know when a unique selling proposition (USP) is too vague or when a benefit is overhyped. Biotech companies that use AI without editorial control risk alienating funders who value clarity over marketing fluff.

For patients and the public

In medical and biotech comms, the stakes are higher. Patients seeking treatment options or reading about vaccine development need accessible, accurate explanations. AI may help distill complex language – but without human review, it can also oversimplify or distort.

The freelance dilemma: what AI can do (and what it can’t)

Some clients now question the need for freelance writers: “If AI can write the content, why pay someone to do it?” It’s a fair question, but it misses the point.

In practice, AI can be a fantastic starting point. It’s useful for overcoming writer’s block, outlining posts, or reformatting existing content. For instance, I’ve used AI to draft initial versions of our own blog posts – especially helpful when client work takes priority. And tools like DeepL are very good at translation. But those drafts are never final. They require shaping, checking, and, often, rewriting.

This is especially true in life sciences. AI doesn’t evaluate whether a claim is overstated, whether a publication is relevant, or whether a feature truly matters to the intended user. Hallucinated references, vague generalizations, and off-brand tone are common – and potentially costly. If the content goes out unchecked, the time saved in drafting can be lost to corrections or, worse, turn into reputational damage.

This is where human-AI collaboration in science writing becomes essential. Writers who understand the science and the audience can use AI to move faster – without compromising accuracy or credibility. That’s what makes freelance science communicators more valuable, not less, in the AI era.

Three client mindsets and how they shape AI use

In biotech content projects, expectations around AI use vary dramatically. Over the past year, I’ve noticed three distinct client mindsets, each with its own risks and requirements.

1. “Don’t use AI – we don’t trust it”

Some teams, particularly those operating in regulated environments or with negative prior experiences, explicitly request that AI tools not be used at any stage of the content creation process. This is entirely valid. They may be concerned about data privacy, factual errors, or losing their original voice.

2. “Please use AI – but use it smartly”

This group expects AI to be part of the workflow, but not the final authority. They recognize its value in terms of faster drafts, cleaner formatting, and getting a head start on structure. But they also understand the limitations. AI can generate language; it can’t evaluate nuance, accuracy, or relevance in a scientific or strategic context.

These clients often speak from experience. They have tested AI tools themselves and are aware of their shortcomings. What they want is synthesis: AI for speed and humans for sense-making. This makes for productive collaboration: they’re pragmatic and efficiency-minded, yet still committed to quality.

3. “We don’t need freelancers any more – AI can do it all”

This group takes the opposite view. Often led by non-specialists, they overestimate what AI can deliver, particularly in scientific fields. They assume that a language model can replace subject-matter expertise, regulatory awareness, and audience-specific framing.

In practice, this rarely holds up. But it’s a belief that’s gaining ground and one that freelancers increasingly have to navigate.

The risk here is that content goes out unreviewed – packed with oversimplified claims, misinterpreted data, or hallucinated references. In life sciences, that’s more than a bad look – it can damage trust with technical audiences.

The fatal flaw: content without real-life anchors

If AI-generated content has one signature weakness, it’s this: it reads well and sounds great, but says nothing.

Without grounding in real experiences – customer feedback, user data, lab workflows, or stakeholder conversations – AI content often drifts into abstraction. It repeats what’s already been said and assembles “readable” sentences that are ultimately forgettable.

In biotech, audiences expect specificity. AI doesn’t know what matters in your client’s market unless you tell it. And it can’t reference things that only happened in internal meetings or real-world testing. That’s why content without real-life anchors is more than just boring – it’s a liability.

A personal note – why I joined the “MI” network

I joined the Menschliche Intelligenz (MI) network, whose name is German for “human intelligence”, to explore the ethical and practical implications of AI in real time. We share studies, question assumptions, and keep each other grounded. Tools are evolving fast, and we owe it to ourselves and our clients to stay informed.

Respect the tool, respect the task

One of the most overlooked risks in AI-assisted writing is confidentiality. Responsible freelancers know to never paste proprietary, patent-related, or sensitive content into AI tools. Prompts should be stripped of client identifiers and unpublished details. Most tools store data on US-based servers, which can pose compliance issues in the EU and beyond.
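For readers who script parts of their workflow, stripping client identifiers from a prompt could look roughly like the Python sketch below. It is a minimal illustration, not a compliance tool: the term list, the company and product names, and the `redact_prompt` helper are all hypothetical, and simple text matching will not catch unpublished details such as data or claims, so a human check before pasting is still needed.

```python
import re

# Hypothetical terms to hide. In practice this list would come from the
# client brief (company name, product names, internal project codes).
SENSITIVE_TERMS = ["Acme Biotech", "PuriMax", "Project Falcon"]

# Deliberately simple pattern for email addresses (illustration only).
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact_prompt(text, terms=SENSITIVE_TERMS):
    """Replace each sensitive term and any email address with a placeholder."""
    redacted = text
    for index, term in enumerate(terms, start=1):
        # re.escape treats the term as literal text; IGNORECASE also
        # catches variants such as "acme biotech".
        pattern = re.compile(re.escape(term), re.IGNORECASE)
        redacted = pattern.sub(f"[REDACTED_{index}]", redacted)
    return EMAIL_PATTERN.sub("[EMAIL]", redacted)


prompt = (
    "Summarize the launch plan for PuriMax by Acme Biotech. "
    "Send questions to jane.doe@acme-biotech.example."
)
print(redact_prompt(prompt))
```

The numbered placeholders keep the prompt readable for the AI tool while letting the writer map the output back to the real names afterwards.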

So here’s the bottom line: AI can assist your work, but it can’t replace your expertise – or your professional ethics.

Let’s keep the human intelligence in science communication

If you’re navigating the shift toward AI-assisted content – and wondering how to do it responsibly – I’d love to compare notes. Whether you’re a biotech marketer adapting your workflows, a freelancer redefining your value, or a founder needing credible content under pressure, we all benefit from thoughtful collaboration.

Need a second pair of expert eyes on your AI-generated draft? Looking to build a content strategy that balances speed with substance, or figuring out what role AI should play in it? We’re here to help.

Let’s talk.

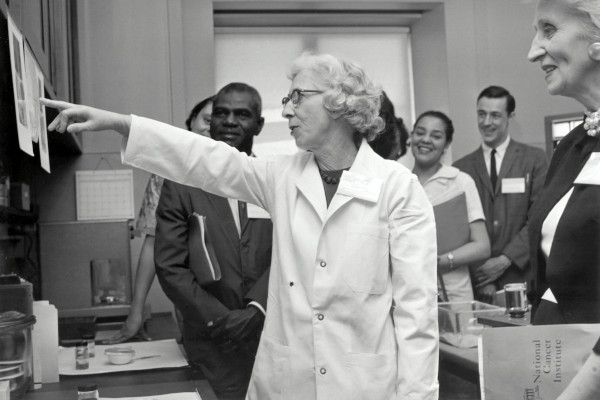

Image: National Cancer Institute on Unsplash

- AI in science communication: help or hype? - October 6, 2025